Table of Contents

- – The Single Strategy To Use For Interview Kicks...

- – Not known Facts About Ai Engineer Vs. Software...

- – Rumored Buzz on Machine Learning Online Cours...

- – Things about Interview Kickstart Launches Bes...

- – The 8-Second Trick For Online Machine Learni...

- – Rumored Buzz on Software Engineering In The ...

- – The Single Strategy To Use For How To Become...

Some people think that's cheating. Well, that's my whole job. If someone else did it, I'm going to use what that person did. The lesson is about putting that aside. I'm forcing myself to think through the possible solutions. It's more about absorbing the material and trying to apply those ideas, and less about finding a library that does the job or finding somebody else who coded it.

Dig a little deeper into the math at the beginning, just so I can build that foundation. Santiago: Finally, lesson number seven. I don't think you have to understand the nuts and bolts of every algorithm before you use it.

I've been using neural networks for the longest time. I do have a sense of how gradient descent works. I can't explain it to you right now. I would have to go back and review it to get a better intuition. That doesn't mean I can't solve problems using neural networks, right? (29:05) Santiago: Trying to make people think, "Well, you're not going to be successful unless you can explain every single detail of how this works." It goes back to our sorting example. I think that's just bullshit advice.

As an engineer, I've worked on many, many systems and I've used many, many things whose nuts and bolts I don't understand, even though I understand the impact they have. That's the last lesson on that thread. Alexey: The funny thing is, when I think about all these libraries like Scikit-Learn, the algorithms they use inside to implement, for example, logistic regression or something else, are not the same as the algorithms we study in machine learning classes.

The Single Strategy To Use For Interview Kickstart Launches Best New Ml Engineer Course

So even if we tried to learn all these basics of machine learning, in the end, the algorithms that these libraries use are different. (30:22) Santiago: Yeah, absolutely. I think we need a lot more pragmatism in the industry. Make a lot more of an impact. Focus on delivering value and a little less on purism.

By the way, there are two different paths. I usually speak to those who want to work in the industry, who want to have their impact there. There is a path for researchers, and that is completely different. I don't dare to speak about that because I don't know.

Out there, in the industry, pragmatism goes a long way for sure. (32:13) Alexey: We had a comment that said, "Feels more like a motivational speech than a discussion about transitioning." So maybe we should switch. (32:40) Santiago: There you go, yeah. (32:48) Alexey: It is a good motivational speech.

Not known Facts About Ai Engineer Vs. Software Engineer - Jellyfish

One of the things I wanted to ask you. First, let's cover a couple of things. Alexey: Let's start with the core tools and frameworks that you need to learn to actually transition.

I know Java. I know SQL. I know how to use Git. I know Bash. Maybe I know Docker. All these things. And I hear about machine learning, and it seems like a cool thing. So, what are the core tools and frameworks? Yes, I watched this video and I'm convinced that I don't need to go deep into math.

What are the core tools and frameworks that I need to learn to do this? (33:10) Santiago: Yeah, absolutely. Great question. I think, number one, you should start learning a little bit of Python. Since you already know Java, I don't think it's going to be a huge transition for you.

Not because Python is the same as Java, but in a week, you're going to get a lot of the differences there. Santiago: Then you get certain core tools that are going to be used throughout your entire career.

Rumored Buzz on Machine Learning Online Course - Applied Machine Learning

You get Scikit-Learn for the collection of machine learning algorithms. Those are tools that you're going to have to use. I don't recommend just going and learning about them out of the blue.

We can talk about specific courses later. Take one of those courses that are going to start introducing you to some problems and to some core ideas of machine learning. Santiago: There is a course on Kaggle which is an introduction. I don't remember the name, but if you go to Kaggle, they have tutorials there for free.

What's good about it is that the only requirement is that you know Python. They're going to present a problem and tell you how to use decision trees to solve that specific problem. I think that process is extremely powerful, because you go from no machine learning background to understanding what the problem is and why you can't solve it with what you know right now, which is straight software engineering practices.
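To make the decision-tree idea concrete, here is a minimal sketch of a one-level tree (a "decision stump") in plain Python. The housing data, the feature meanings, and the names `best_stump` and `predict` are made up for illustration; this is not the Kaggle course's code, and a real project would more likely use a library implementation.

```python
def best_stump(rows, labels):
    """Try every feature/threshold pair and keep the split with the fewest errors."""
    best = None
    for feature in range(len(rows[0])):
        for threshold in sorted({row[feature] for row in rows}):
            left = [y for row, y in zip(rows, labels) if row[feature] <= threshold]
            right = [y for row, y in zip(rows, labels) if row[feature] > threshold]
            if not left or not right:
                continue  # a split that leaves one side empty tells us nothing
            # Each side predicts its most common label.
            left_label = max(set(left), key=left.count)
            right_label = max(set(right), key=right.count)
            errors = (sum(y != left_label for y in left)
                      + sum(y != right_label for y in right))
            if best is None or errors < best["errors"]:
                best = {"feature": feature, "threshold": threshold,
                        "left": left_label, "right": right_label,
                        "errors": errors}
    return best


def predict(stump, row):
    """Follow the single learned rule for one new row."""
    if row[stump["feature"]] <= stump["threshold"]:
        return stump["left"]
    return stump["right"]


# Hypothetical toy data: [rooms, area in square meters] -> price band.
rows = [[2, 50], [3, 70], [3, 80], [4, 120], [5, 150], [4, 110]]
labels = ["cheap", "cheap", "cheap", "expensive", "expensive", "expensive"]

stump = best_stump(rows, labels)
print(stump)
print(predict(stump, [2, 60]), predict(stump, [5, 140]))
```

The point of the sketch is the shift in mindset: the rule (`rooms <= 3`) is not written by the programmer but found by searching the data, which is what a full decision tree does recursively.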

Things about Interview Kickstart Launches Best New Ml Engineer Course

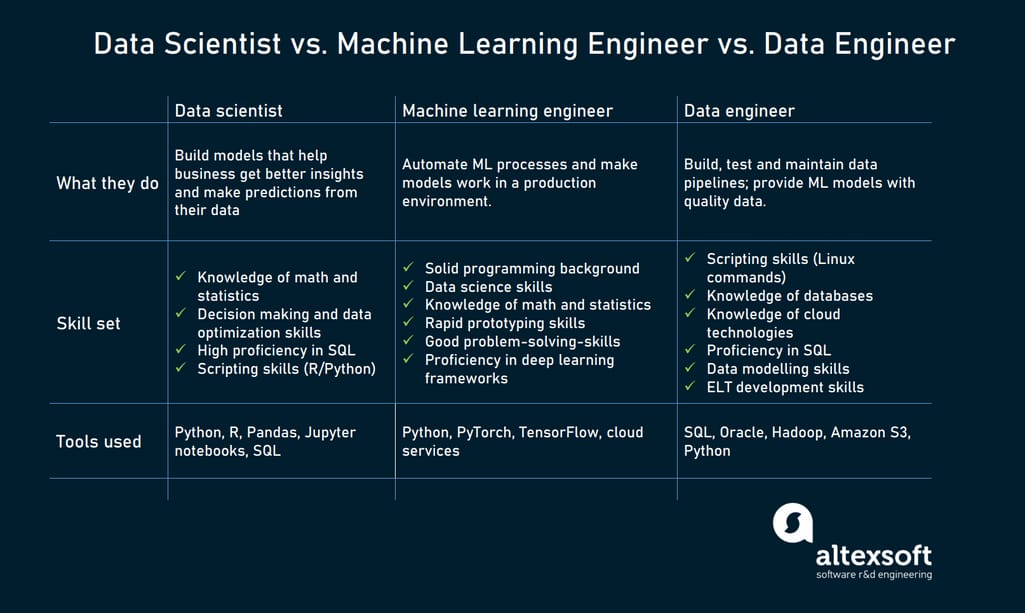

On the other hand, ML engineers specialize in building and deploying machine learning models. They focus on training models with data to make predictions or automate tasks. While there is overlap, AI engineers handle more diverse AI applications, while ML engineers have a narrower focus on machine learning algorithms and their practical implementation.

Machine learning engineers focus on developing and deploying machine learning models into production systems. On the other hand, data scientists have a broader role that includes data collection, cleaning, exploration, and building models.

As organizations increasingly adopt AI and machine learning technologies, the demand for skilled professionals grows. Machine learning engineers work on cutting-edge projects, contribute to innovation, and earn competitive salaries. However, success in this field requires continuous learning and keeping up with evolving technologies and techniques. Machine learning roles are usually well paid, with high earning potential.

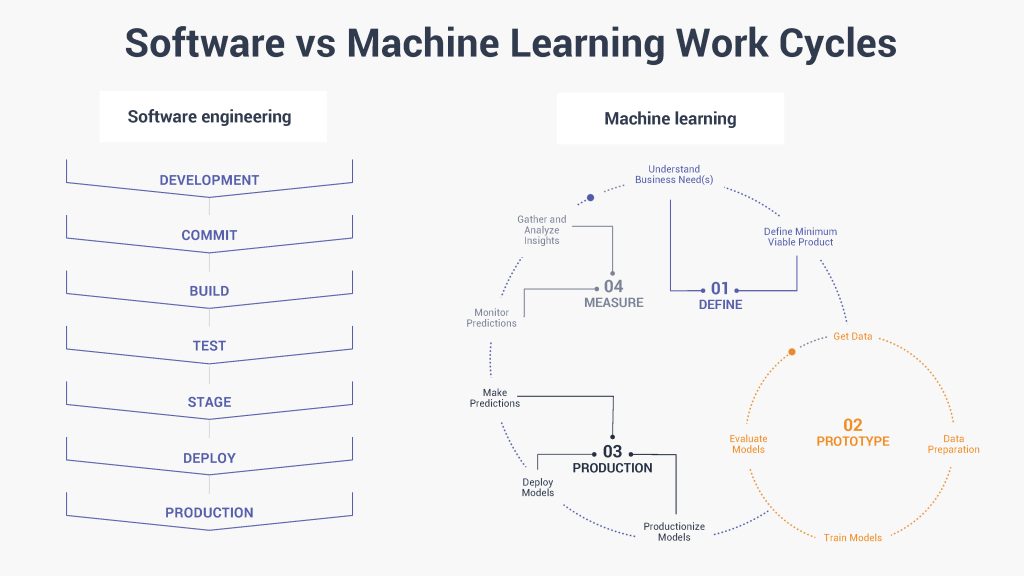

ML is fundamentally different from traditional software development because it focuses on teaching computers to learn from data, rather than programming explicit rules that are executed systematically. Uncertainty of outcomes: You are probably used to writing code with predictable outputs, whether your function runs once or a thousand times. In ML, however, the outcomes are less certain.
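The contrast can be sketched in a few lines of Python. The example below is an illustration under assumptions, not a real training pipeline: `add_tax` stands in for ordinary rule-based code, and `train_tiny_model` is a hypothetical one-weight model fitted with stochastic gradient descent on made-up data where the true relationship is `y = 2x`.

```python
import random


def add_tax(price):
    """Ordinary code: an explicit rule, same output for the same input every time."""
    return round(price * 1.2, 2)


def train_tiny_model(seed, steps=50, lr=0.02):
    """Fit y = w * x by stochastic gradient descent from a random starting weight."""
    rng = random.Random(seed)
    w = rng.uniform(-1, 1)                        # random initialization
    data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]   # made-up samples of y = 2x
    for _ in range(steps):
        x, y = rng.choice(data)                   # random sample order
        grad = 2 * (w * x - y) * x                # derivative of squared error w.r.t. w
        w -= lr * grad
    return w


print(add_tax(100), add_tax(100))                 # identical on every call
print(train_tiny_model(seed=0), train_tiny_model(seed=1))
```

Both training runs land near the true weight of 2, but not on the same value, because the starting point and the sample order depend on the seed. Fixing the seed makes a run repeatable, which is why ML code often sets seeds explicitly and evaluates results statistically rather than by exact equality.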

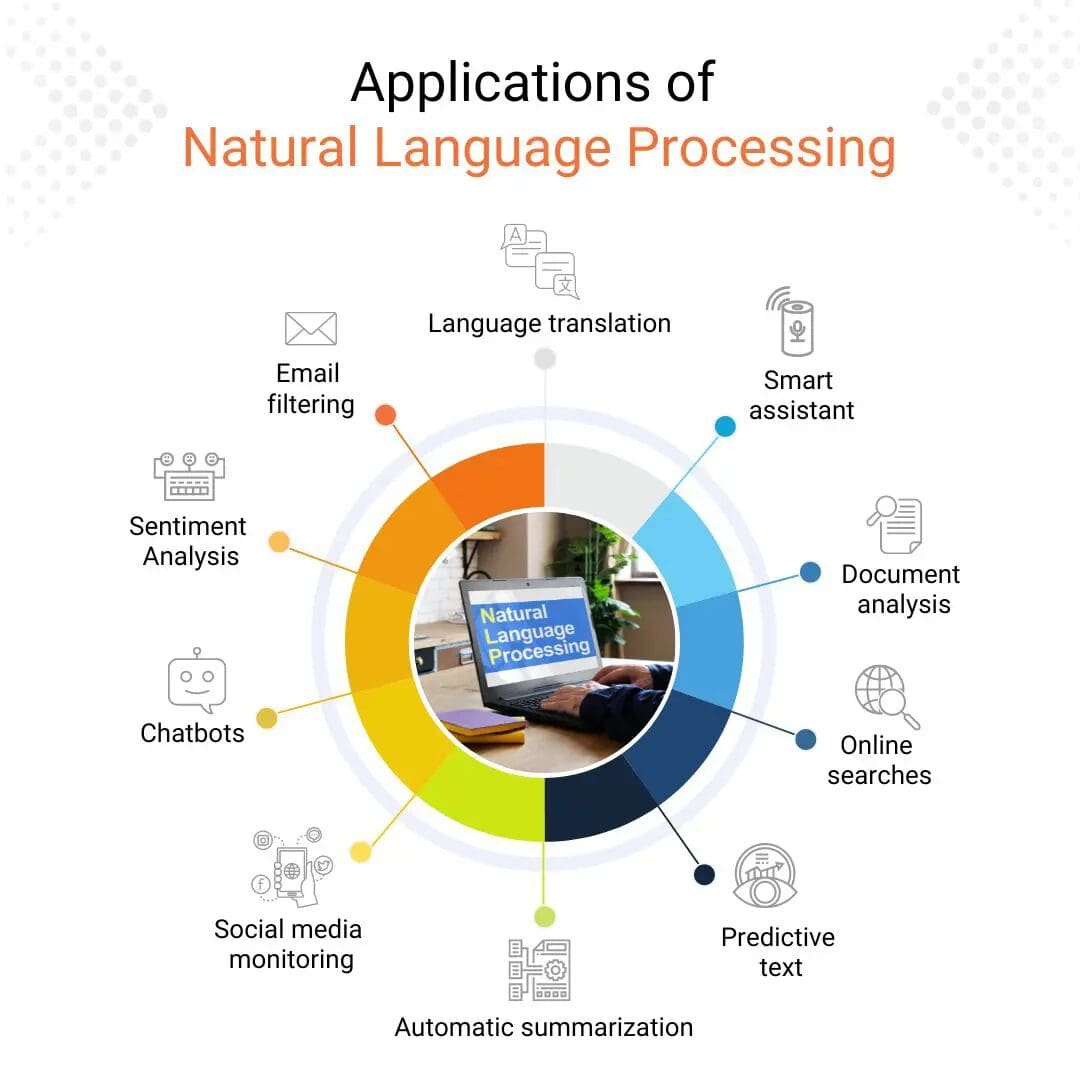

Pre-training and fine-tuning: How these models are trained on vast datasets and then fine-tuned for specific tasks. Applications of LLMs: Such as text generation, sentiment analysis, and information search and retrieval. Papers like "Attention Is All You Need" by Vaswani et al., which introduced transformers. Online tutorials and courses focusing on NLP and transformers, such as the Hugging Face course on transformers.

The 8-Second Trick For Online Machine Learning Engineering & Ai Bootcamp

The ability to manage codebases, merge changes, and resolve conflicts is just as important in ML development as it is in traditional software projects. The skills developed in debugging and testing software applications are highly transferable. While the context might shift from debugging application logic to identifying issues in data processing or model training, the underlying principles of systematic investigation, hypothesis testing, and iterative refinement are the same.

Machine learning, at its core, is heavily dependent on statistics and probability theory. These are crucial for understanding how algorithms learn from data, make predictions, and evaluate their performance.
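As a small illustration of the "evaluate their performance" part, the sketch below computes accuracy, precision, and recall from a list of true labels and a list of predictions. The labels are invented for the example and the function names are hypothetical; libraries provide equivalent metrics, but the arithmetic is simple enough to write out.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count true/false positives and negatives for a binary classifier."""
    tp = fp = fn = tn = 0
    for truth, pred in zip(y_true, y_pred):
        if pred == positive and truth == positive:
            tp += 1
        elif pred == positive:
            fp += 1
        elif truth == positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def evaluate(y_true, y_pred):
    """Return accuracy, precision, and recall as a dictionary."""
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    return {
        "accuracy": (tp + tn) / len(y_true),
        # Of everything predicted positive, how much really was positive?
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        # Of everything that really was positive, how much did we find?
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


# Made-up labels: 1 = positive class, 0 = negative class.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = evaluate(y_true, y_pred)
print(metrics)
```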

For those interested in LLMs, a thorough understanding of deep learning architectures is beneficial. This includes not only the mechanics of neural networks but also the architecture of specific models for different use cases, like CNNs (Convolutional Neural Networks) for image processing and RNNs (Recurrent Neural Networks) and transformers for sequential data and natural language processing.

You should be aware of these issues and learn techniques for identifying, mitigating, and communicating about bias in ML models. This includes the potential impact of automated decisions and the ethical implications. Many models, especially LLMs, require significant computational resources that are often provided by cloud platforms like AWS, Google Cloud, and Azure.

Building these skills will not only facilitate a successful transition into ML but also ensure that developers can contribute effectively and responsibly to the advancement of this dynamic field. Theory is essential, but nothing beats hands-on experience. Start working on projects that allow you to apply what you've learned in a practical context.

Participate in competitions: Join platforms like Kaggle to take part in NLP competitions. Build your own projects: Start with simple applications, such as a chatbot or a text summarization tool, and gradually increase complexity. The field of ML and LLMs is rapidly evolving, with new breakthroughs and technologies emerging regularly. Staying updated with the latest research and trends is crucial.

Rumored Buzz on Software Engineering In The Age Of Ai

Join communities and forums, such as Reddit's r/MachineLearning or community Slack channels, to discuss ideas and get advice. Attend workshops, meetups, and conferences to connect with other professionals in the field. Contribute to open-source projects or write blog posts about your learning journey and projects. As you gain expertise, start looking for opportunities to incorporate ML and LLMs into your work, or seek new roles focused on these technologies.

Vectors, matrices, and their role in ML algorithms. Terms like model, dataset, features, labels, training, inference, and validation. Data collection, preprocessing techniques, model training, evaluation processes, and deployment considerations.

Decision Trees and Random Forests: Intuitive and interpretable models. Support Vector Machines: Maximum margin classification. Matching problem types with suitable models. Balancing performance and complexity. Basic structure of neural networks: neurons, layers, activation functions. Layered computation and forward propagation. Feedforward Networks, Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs). Image recognition, sequence prediction, and time-series analysis.
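The neuron, layer, and activation-function vocabulary above can be sketched as a forward pass through a tiny feedforward network in plain Python. The weights, biases, and layer sizes below are arbitrary values chosen for illustration, not a trained model, and the helper names are hypothetical.

```python
import math


def relu(x):
    """Activation: pass positive values through, clamp negatives to zero."""
    return max(0.0, x)


def sigmoid(x):
    """Activation: squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def dense(inputs, weights, biases, activation):
    """One layer: each neuron takes a weighted sum of the inputs, adds a bias, then activates."""
    return [
        activation(sum(w * x for w, x in zip(neuron_weights, inputs)) + bias)
        for neuron_weights, bias in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Forward propagation: feed the output of each layer into the next."""
    for weights, biases, activation in layers:
        inputs = dense(inputs, weights, biases, activation)
    return inputs


# Arbitrary illustrative parameters: 2 inputs -> 2 hidden neurons -> 1 output.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0], relu),
    ([[1.0, -1.0]], [0.0], sigmoid),
]

hidden = dense([2.0, 1.0], *layers[0])
output = forward([2.0, 1.0], layers)
print(hidden, output)
```

Training would adjust the weights and biases to reduce prediction error; this sketch only shows how a prediction is computed once those values exist.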

Continuous Integration/Continuous Deployment (CI/CD) for ML workflows. Model monitoring, versioning, and performance tracking. Detecting and addressing changes in model performance over time.
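One simple way to detect a change in model performance over time is to track accuracy over a rolling window of recent predictions and flag a drop below a baseline. The sketch below assumes that true labels eventually arrive for each prediction; the class name `AccuracyMonitor`, the window size, and the thresholds are hypothetical choices, and production systems typically use dedicated monitoring tooling.

```python
from collections import deque


class AccuracyMonitor:
    """Track accuracy over the most recent predictions and flag degradation."""

    def __init__(self, window=4, baseline=0.9, tolerance=0.15):
        self.outcomes = deque(maxlen=window)  # oldest results drop off automatically
        self.baseline = baseline              # accuracy measured at deployment time
        self.tolerance = tolerance            # how far below baseline is acceptable

    def record(self, prediction, actual):
        """Store whether one prediction matched the label that arrived later."""
        self.outcomes.append(prediction == actual)

    def accuracy(self):
        """Accuracy over the current window, or None if nothing is recorded yet."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def degraded(self):
        """True once the window is full and accuracy has fallen too far."""
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.accuracy() < self.baseline - self.tolerance


monitor = AccuracyMonitor()
for prediction, actual in [(1, 1), (0, 0), (1, 1), (0, 0)]:
    monitor.record(prediction, actual)
healthy = monitor.degraded()

# Simulate the input data shifting so that the model starts to miss.
for prediction, actual in [(1, 0), (0, 1)]:
    monitor.record(prediction, actual)
print(healthy, monitor.accuracy(), monitor.degraded())
```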

The Single Strategy To Use For How To Become A Machine Learning Engineer In 2025

You'll be introduced to three of the most relevant components of the AI/ML discipline: supervised learning, neural networks, and deep learning. You'll grasp the differences between traditional programming and machine learning through hands-on development in supervised learning before building out complex distributed applications with neural networks.

This course serves as an overview of machine lear ...
